
Introduction

The ARFLEX project (Adaptive Robot for FLEXible manufacturing systems - IST-NMP2-016680) was funded by the European Community within the Sixth Framework Programme, with the aim of introducing a radical innovation to existing industrial robots by means of the most advanced technologies in control theory, embedded systems, sensor devices and vision systems.

The ARFLEX Consortium is composed of seven partners, as shown in Figure 1: EICAS Automazione S.p.A (Project Coordinator, Italy), COMAU Robotics (Italy), ACTUA S.r.l (Italy), Jozef Stefan Institute (Slovenia), Institute of Mechatronic Systems (Switzerland), the University of Antwerp (Belgium) and the Fraunhofer Institut Produktionsanlagen und Konstruktionstechnik (Germany).

Figure 1: ARFLEX Consortium

The challenging project objective is to overcome the mechanical limitations of current robotic systems, which are due to the limited stiffness of the arms, the large imprecision in the coupling joints and, in general, non-linearity and friction effects. The aim is to improve the absolute positioning accuracy of the robot tool centre point (TCP) to 0.1 mm, and to improve the robot flexibility, by embedding advanced real-time control techniques, electronic devices, and force and vision sensors.

After three years of research and development, the ARFLEX Project has been successfully concluded, achieving all its objectives in terms of accuracy, flexibility, adaptability and low cost. The fulfilment of the expected results represents a further important change for industrial robots and the related applications, with direct impact on the market of the manufacturing sectors: it extends the use of robots from high-volume to lower-volume products and shifts manufacturing operations to robots from other machine tools, thus reducing the share of manual work.

Furthermore, as a by-product, the ARFLEX project will have a general impact on the engineering design approach for products with a relevant mechanical base, pushing for a deeper integration of mechanical engineering, electronics and ICT technologies.

The innovative ARFLEX concept

The mechanical limitations of conventional robots, such as structural deflections, gearbox backlash and reduced arm stiffness, prevent high-accuracy positioning of the Tool Centre Point (TCP). To correct the resulting error, a suitable sensor system has to be located after the robot end-effector in order to measure the gap with respect to the reference position.

The ARFLEX system concept, developed by EICAS, consists in measuring directly both position and orientation (hereinafter defined as “pose”) of the robot TCP by means of a suitable multi-camera system (ARFLEX smart cameras) fixed around the robot, and then applying the most advanced real-time control methods for guaranteeing the accuracy and the flexibility/adaptability requirements.

The applied solution is fully innovative, low-cost and able to operate in an industrial environment with a plug-and-play approach.

Specifically, the ARFLEX system includes the ARFLEX measurement sensor, which measures the pose of a mobile object in a fully contactless way. Sophisticated self-calibration procedures make ARFLEX a fully autonomous sensor, able to operate in different working conditions with high dependability. The self-calibration makes it possible to use low-cost cameras, without the need for expensive calibration procedures.

In addition, powerful data fusion techniques make it possible to extract the necessary information from the multi-camera system and to cope with the real-time constraints (the system operates in real time at a sample frequency of 100 Hz).
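As a rough illustration of what a fusion step can look like, the sketch below combines TCP position estimates from several cameras by inverse-variance weighting. This is a generic textbook technique chosen for illustration, not the ARFLEX data fusion algorithm, which the text does not describe; the function name, the measurement values and the variances are all hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# 100 Hz sample frequency stated in the text: one fused estimate every 10 ms.
SAMPLE_PERIOD_S = 1.0 / 100.0


def fuse_positions(estimates: Sequence[Vec3], variances: Sequence[float]) -> Vec3:
    """Inverse-variance weighted mean of per-camera position estimates (hypothetical helper)."""
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need at least one estimate and one variance per estimate")
    # A camera with a smaller variance (more trusted) gets a larger weight.
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    return tuple(
        sum(w * e[axis] for w, e in zip(weights, estimates)) / total
        for axis in range(3)
    )


# Hypothetical measurements in millimetres from three cameras.
camera_estimates = [(100.0, 50.0, 20.0), (100.2, 50.1, 19.9), (99.9, 49.8, 20.1)]
camera_variances = [0.01, 0.04, 0.04]
fused = fuse_positions(camera_estimates, camera_variances)
```

In this form a camera can be excluded from the estimate simply by leaving its measurement out of the input lists.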

Moreover, advanced multi-input multi-output control loops have been designed for taking into account the non-linearities of the robotic system and the main causes of error due to elasticity, backlash and hysteresis.

The ARFLEX vision system

The multi-camera system developed in the ARFLEX project is a key component for achieving high accuracy in TCP absolute positioning. It is based on fixed cameras and passive markers, sensitive to infrared light, distributed on the robot end-effector. The ARFLEX vision system, equipped with infrared filters, captures only the marker features of the images that are relevant for the pose reconstruction.

Several analyses and specific tests on the proposed vision system were performed by the Jozef Stefan Institute, using the selected passive markers and commercial cameras, in order to verify the system feasibility and to define requirements for the vision sensor selection and guidelines for the camera system design.

Each ARFLEX Smart Camera, shown in Figure 2, has been realized by ACTUA based on CMOS sensors and by applying state-of-the-art embedded technologies and integrated circuits.

Figure 2: ARFLEX Smart Camera (ACTUA)

The ARFLEX system works in two operating modes:

· Startup Calibration mode: thanks to the advanced algorithms developed by EICAS, the ARFLEX system is able to perform a fully automated self-calibration with the robot and the working environment, without requiring any accurate a priori knowledge of the camera installation;

· Normal Mode: this is the normal operating mode of the ARFLEX system during the robot operating tasks, where the Smart Cameras measure the TCP position and attitude so that the vision control loop can compensate for any error and ensure the required accuracy.

The ARFLEX self-calibration procedures have been conceived to reject errors due to the non-ideal characteristics of both the vision sensor and the optical lens, in order to guarantee the best performance in all working conditions. Furthermore, the ARFLEX system is able to complete the mission tasks even in case of smart camera failure, thanks to FDIR algorithms (Failure Detection Isolation Recovery) implemented in the core system.

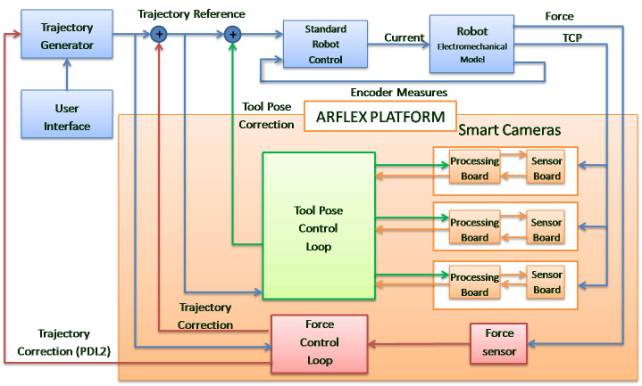

The ARFLEX platform and the multi-hierarchical control architecture

To achieve high performance in terms of accuracy and flexibility, the ARFLEX system applies a multi-hierarchical control architecture, as shown in Figure 3, based on visual and non-visual sensors.

Figure 3: The ARFLEX multi-hierarchical control architecture

The hierarchical architecture represents the best approach for the following reasons:

1. it allows keeping an existing robot and the relevant control system essentially unchanged. This is the key point that makes the ARFLEX approach feasible: it was enough “to open” the existing robot control so that it is able to receive and implement the corrections to the reference trajectories coming from the higher-level ARFLEX controls. The rest of the robot system remains unchanged.

2. it allows designing a system that is modular and distributed at the same time. These are essential requirements, in particular for the sensors, which can be distributed or changed depending on the application requirements, and for the system dependability, which is the capability to successfully complete the mission in spite of component failures.

The conventional robot control system is the lowest level of the ARFLEX architecture and provides the commands to the motors for moving the robot according to specific tasks. At the higher hierarchical level, the vision control loop receives the pose measurement at every sample time and generates the correction that compensates for any positioning error, allowing the ARFLEX system to reach the required absolute accuracy of 0.1 mm.
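The two-level idea can be sketched with a deliberately simplified, one-dimensional toy model: the lower level reaches the commanded position only up to an unknown constant offset, and an outer loop accumulates a correction from the measured error at each sample. This is an assumption-laden illustration of an integral-type outer loop, not the ARFLEX control law; the function name, the gain and the offset value are hypothetical.

```python
def run_vision_loop(reference_mm, offset_mm, gain=0.5, steps=50):
    """Toy outer loop: accumulate a correction to the reference from the measured error.

    The 'robot' is modelled as reaching reference + correction + offset, where
    offset_mm stands in for uncompensated mechanical errors (hypothetical model).
    """
    correction = 0.0
    errors = []
    for _ in range(steps):
        # Lower level: executes the corrected reference, but with a constant offset.
        measured = reference_mm + correction + offset_mm
        # Higher level: compare the measured position with the reference.
        error = reference_mm - measured
        errors.append(error)
        # Integral-type update of the correction sent to the lower level.
        correction += gain * error
    return correction, errors


# Hypothetical numbers: 1 mm uncompensated offset on a 100 mm reference.
final_correction, error_history = run_vision_loop(reference_mm=100.0, offset_mm=1.0)
within_target = abs(error_history[-1]) < 0.1  # 0.1 mm target from the text
```

With these toy numbers the error shrinks by the factor (1 - gain) at each sample, so the correction converges to the negative of the offset.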

The system architecture allows other control systems to be implemented at the higher level, such as the force compliance control loop developed by IPK with the aim of improving the robot flexibility. The robotic system, equipped with a sophisticated force sensor managed through advanced control algorithms, is able to comply with external forces applied on the robot TCP and to modify the trajectories accordingly: the robot is able to follow profile changes on a given surface, or to comply with the forces applied on the robot by the human operator during a task involving human-machine interaction.
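One common way to turn a measured force into a trajectory modification is an admittance law, in which the force drives a virtual mass-damper whose motion is added to the reference trajectory. The sketch below shows a one-axis version integrated with explicit Euler steps. It is a generic illustration, not the IPK force compliance algorithm; the function name and the mass, damping and time-step values are hypothetical.

```python
def admittance_offsets(forces_n, mass_kg=2.0, damping_ns_per_m=20.0, dt_s=0.01):
    """Integrate M*a + D*v = F with explicit Euler; return a list of (velocity, offset).

    forces_n is the sequence of measured external forces, one per sample.
    The returned offset is what would be added to the reference trajectory.
    """
    velocity = 0.0
    offset = 0.0
    history = []
    for force in forces_n:
        accel = (force - damping_ns_per_m * velocity) / mass_kg
        velocity += accel * dt_s
        offset += velocity * dt_s
        history.append((velocity, offset))
    return history


# Hypothetical scenario: an operator pushes with a constant 10 N for 5 s.
push = admittance_offsets([10.0] * 500)
steady_velocity = push[-1][0]  # approaches F / D for a constant force
```

A larger virtual damping makes the robot yield more slowly to the same force, which is the main tuning knob in this simplified form.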

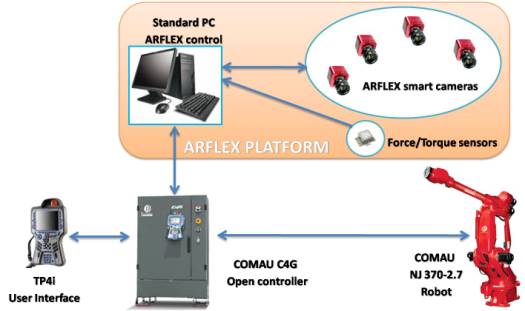

The ARFLEX control approach needs a robot controller able to receive the corrections coming from the higher control levels and apply them to the robotic system. The multi-hierarchical control architecture has been realized through the C4G Open Controller made available by COMAU Robotics. In particular, the C4G Open Controller provides a specific operating mode that enables the robot to be controlled through external signals; in the ARFLEX context, this mode has been used to apply the corrections provided by the ARFLEX system.

The ARFLEX platform (Figure 4), equipped with the smart cameras, the force compliance controls and the relevant advanced algorithms, provides all the functionality needed for the high-accuracy and flexibility applications required by the market. This approach opens the way to improving the performance of current working operations and to reaching new application fields that are not yet accessible to conventional industrial robots.

Figure 4: The ARFLEX Platform

Embedded Technologies and system integration

The state of the art of embedded technologies has been applied to the Smart Cameras and also to the real time environment, developed for advanced rapid prototyping features.

In the real time environment, all software modules run in real time on the central ARFLEX processor thanks to the real time operating system Linux RTAI.

This software is composed of several complementary parts that work together and draw on multithreaded programming features. In particular, if the ARFLEX processor has a multi-core architecture, it is possible to specify on which core each thread has to run, in order to execute the code in parallel and reduce the processing time.
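The idea of assigning threads to cores can be illustrated with the standard `os.sched_setaffinity` call, which Python exposes only on some Unix platforms such as Linux. This is a sketch of the general mechanism, not the ARFLEX software, which runs on Linux RTAI and whose own API is not described here; the thread names and the helper function are hypothetical. On Linux, calling it with pid 0 from inside a thread is expected to affect that calling thread, which is the assumption this sketch relies on.

```python
import os
import threading


def pin_current_thread(core_index):
    """Try to pin the calling thread to one of the allowed cores.

    Returns the chosen core id, or None if pinning is unavailable or refused.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None  # platform without this call (e.g. macOS, Windows)
    try:
        allowed = sorted(os.sched_getaffinity(0))
        core = allowed[core_index % len(allowed)]
        os.sched_setaffinity(0, {core})  # pid 0: the caller itself
        return core
    except OSError:
        return None  # e.g. restricted sandbox


def worker(name, core_index, results):
    # Each worker pins itself first, then would run its own periodic job.
    results[name] = pin_current_thread(core_index)


# Hypothetical thread names standing in for two software modules.
results = {}
threads = [
    threading.Thread(target=worker, args=(name, index, results))
    for index, name in enumerate(["vision_io", "control_loop"])
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

After the run, `results` maps each thread name to the core it was pinned to, or to `None` where pinning was not possible.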

Communication protocols have been developed for realizing specific channels dedicated to data exchange with the ARFLEX Smart Cameras, for vision system management and configuration, and with the C4G Open Controller for programmed operating tasks executions.

The basic software manages all communication and interface issues and is the bridge between the external devices (robot open controller and smart cameras) and the internal memories. The basic software is responsible for the real-time execution and for the external data interchange; it exploits the potential of the real-time operating system (RTAI), which provides a real-time scheduler.

University of Antwerp (UA) conceived, designed and prototyped the hybrid real-time component model based on OSGi and RTAI to support hard real-time tasks while keeping flexibility and adaptability.

This middleware approach supports the UPnP protocol, which is used to provide plug-and-play functions for sensors and actuators so that they can be managed dynamically. The middleware can be deployed into normal OSGi on the RTAI real-time OS.

ARFLEX System Demonstrators

In order to demonstrate the results achieved by the ARFLEX project, three robot demonstrators have been developed and set up:

• the TCP High Accuracy Control demonstrator, shown in Figure 6, has been realized at EICAS and COMAU premises in order to demonstrate the performance of the accurate positioning of the ROBOT NJ end-effector;

Figure 6: The TCP High Accuracy demonstrator (EICAS & COMAU)

• the Force Control and Flexibility demonstrator (Figure 7) has been realized by IPK and COMAU in order to demonstrate the performance offered by the force compliance control algorithms implemented on a COMAU robot;

Figure 7: The Force Control and Flexibility demonstrator (IPK & COMAU)

• the Visual Servoing Experimental platform has been implemented at JSI premises (see Figure 5), in Slovenia, in order to provide preliminary requirements and guidelines for the final ARFLEX vision system design and development.

Figure 5: The Visual Servoing demonstrator (JSI)

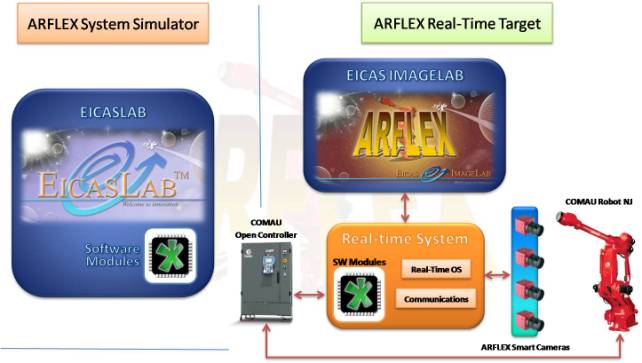

The ARFLEX simulator and the real-time prototyping environments

In order to fulfil the ARFLEX requirements, a very powerful simulation environment has been realized (Figure 8). Based on the EICASLAB platform, the ARFLEX simulator leverages the best features implemented in EICASLAB, the Professional Software Suite for automatic control design and forecasting, developed by EICAS, that offers a fully configurable environment able to support all the development phases, starting from the system design to the testing of the product.

Figure 8: The ARFLEX Real-time Prototyping Environment

The ARFLEX simulator integrates the following real-time and non-real-time components in a single software tool:

1. the complete simulation environment (non real-time);

2. the real-time environment, for on-field direct application of the control algorithms.

The ARFLEX simulation environment is based on the EICASLAB Professional Software Suite (www.eicaslab.com) where the following components (HW and SW) are modelled according to the real environment:

• the fine model of the robot, that represents the plant of the ARFLEX system;

• the low-level control algorithms, which control the motors moving the robot joints;

• the vision system components, implementing the model of the ARFLEX Smart Cameras used to measure the robot pose;

• the ARFLEX control algorithms, implementing the high-level distributed control with the aim to correct the robot pose in order to improve the accuracy and fulfil the ARFLEX requirements.

The ARFLEX simulator relies on the multiprocessor architecture and multi-hierarchical level control features provided by EICASLAB, used for conceiving and implementing the distributed control architectures in ARFLEX. The control software is divided into functions that can be allocated to different processors, each one working with its own sampling frequency, phase and duration.

The EICASLAB Suite also provides a suitable environment to design, develop and test real-time control algorithms, since the suite is conceived with scheduling-aware real-time concepts. Every model component (Continuous Plants, Control Area, Mission Area, etc.) can be scheduled depending on the requirements of the system to be modelled, and the Scheduling Manager allows setting the time, duration and phase of each simulated block activity.
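To make the notions of sampling period, phase and duration concrete, the sketch below builds the activation timeline of a few periodic functions over one hyperperiod and checks whether any activations would overlap on a single processor. It does not use or reproduce the EICASLAB Scheduling Manager; the `Task` class, the function names and the timing values are hypothetical, apart from the 10 ms period that corresponds to the 100 Hz sample frequency mentioned earlier.

```python
from dataclasses import dataclass
from functools import reduce
from math import gcd


@dataclass(frozen=True)
class Task:
    name: str
    period_ms: int
    phase_ms: int
    duration_ms: int


def hyperperiod(tasks):
    """Least common multiple of all task periods."""
    return reduce(lambda a, b: a * b // gcd(a, b), (t.period_ms for t in tasks))


def build_timeline(tasks):
    """Return sorted (start_ms, end_ms, name) activations over one hyperperiod."""
    horizon = hyperperiod(tasks)
    slots = []
    for t in tasks:
        for start in range(t.phase_ms, horizon, t.period_ms):
            slots.append((start, start + t.duration_ms, t.name))
    return sorted(slots)


def overlaps(slots):
    """True if any activation starts before the previous one (sorted by start) ends."""
    return any(a[1] > b[0] for a, b in zip(slots, slots[1:]))


# Hypothetical allocation on one processor.
tasks = [
    Task("vision_correction", period_ms=10, phase_ms=0, duration_ms=2),
    Task("force_compliance", period_ms=5, phase_ms=2, duration_ms=1),
]
timeline = build_timeline(tasks)
has_conflict = overlaps(timeline)
```

Changing a phase so that two activations collide makes `overlaps` return `True`, which is the kind of check a schedule needs to pass before the functions are allocated to the same processor.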

The control algorithms designed and validated in such an environment are compliant with the real-time requirements and are naturally suited to integration in the hardware platform: in fact, the control algorithm code developed with the EICASLAB simulation environment is the same code that runs on-field with the required performance.

The possibility of moving from the prototype to the on-field application without modifying the code developed in the simulation environment is the key feature of the ARFLEX simulator. Once the results obtained in simulation through control tuning satisfy the system requirements, it is straightforward to transfer the control algorithms to the real-time environment provided by the ARFLEX simulator, which is designed to work with the real HW and SW components (robots, open controllers and smart cameras).

The real time environment works on a real time operating system (Linux RTAI) and includes a basic software layer for communication purposes and a graphical Human Machine Interface (HMI) for configuration, calibration and operative mode management realized within the EICAS IMAGELAB.

Advanced graphical HMI (Human Machine Interfaces), communication protocols, real-time operating system (Linux RTAI), middleware functions and software modules are developed to complete the ARFLEX system with the necessary tools for working in real-time and taking control on all the operating tasks.

The ARFLEX system also provides features to record a large amount of data (system variables, states of the robot and control system, host commands, measurements, etc.) that can be used to perform off-line analysis by means of the EICASLAB Slow Motion View. This feature allows the experimental trials recorded on-field to be replayed in the simulation environment, where the performance of the control algorithms can be analysed and verified step by step and variable by variable.

The ARFLEX simulator provides a complete software suite that integrates powerful simulation tools, a rapid prototyping test environment and the real-time target. The solution is ready to run for on-field application and represents a key feature for improving the way rapid prototyping is conceived and realized.

The ARFLEX Project demonstrated

The system architecture and the advanced control algorithms developed during the three-year ARFLEX project demonstrated a further step forward in the robotics field: an industrial robot equipped with the ARFLEX system is able to achieve an absolute accuracy of 0.1 mm in a volume of 1x1x1 m, and provides flexibility and adaptability thanks to the implementation of the force compliance algorithms.

Today there is demand for industrial robots working with higher accuracy than the best currently available. The following application fields and related accuracy demands have been identified:

1. aerospace applications (riveting and drilling): 0.1 mm positioning accuracy is required;

2. machining robot applications (metal cutting, milling, deburring): about 0.1 mm accuracy is required;

3. sensor-guided robotic laser welding applications: 0.15 mm maximum path error is required.

The above accuracy requirements correspond to an improvement by a factor of about 10 over the precision offered at present by industrial robots which, precision aside, would be suitable for performing the operations considered.

Moreover, the use of robots by SMEs is strongly limited today by the fact that the available industrial robots do not meet the requirements of flexibility and adaptability, which are higher in SMEs than in large industries. In fact, SMEs produce in small batches, which requires re-programming the robots and adapting them to different manufacturing procedures. This in turn requires robots equipped with force and compliance controls, which improve the flexibility and adaptability needed by SMEs.

With ARFLEX, the needs of large, medium and small enterprises can find a common solution able to satisfy several different scenarios and new interesting opportunities for the industrial robot diffusion are opened.